Trust, Semiotics, and the Design of an AI Meeting Tool

Glyptik is an AI-powered meeting assistant that bridges academic research and practical design to turn complex meeting interactions into seamless, trustworthy experiences.

Project Overview

I set out to design an AI meeting summarization interface that users could trust and use with confidence.

By blending human-computer interaction heuristics with cognitive semiotics, I developed and tested a design framework that improves trust, transparency, and usability in AI-generated summaries.

Impact at a glance

- Built a tested, scalable design framework for AI-generated summaries.

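- Improved user trust and usability scores in testing, including 35% higher trust scores for the final design direction (Prototype C) than for the minimalist baseline (Prototype A).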

- Produced actionable guidelines adaptable to any AI productivity tool.


The Challenge

AI meeting summaries are fast but often distrusted. Through early research, I identified three major pain points:

Users doubt accuracy when AI outputs lack explanation.

Ambiguous UI elements lead to misinterpretation.

Lack of feedback causes users to abandon the feature.

Goal

Design an interface that communicates clearly, instills trust, and keeps users engaged without slowing them down.

Research Process

I followed a Double Diamond process infused with academic rigor.

Discover

• Reviewed academic literature on semiotics, trust in AI, and usability heuristics.

• Conducted competitor analysis of AI meeting tools (e.g., Zoom, Otter, Microsoft Teams).

• Interviewed participants to uncover trust barriers in AI tools.

Define

• Consolidated findings into three key blockers: lack of transparency, cold or impersonal language, and unclear validation options.

• Mapped three hypotheses:

  - Visual cues improve trust.

  - Linguistic cues improve usability.

  - Combining both yields the highest trust and usability scores.

Design Exploration

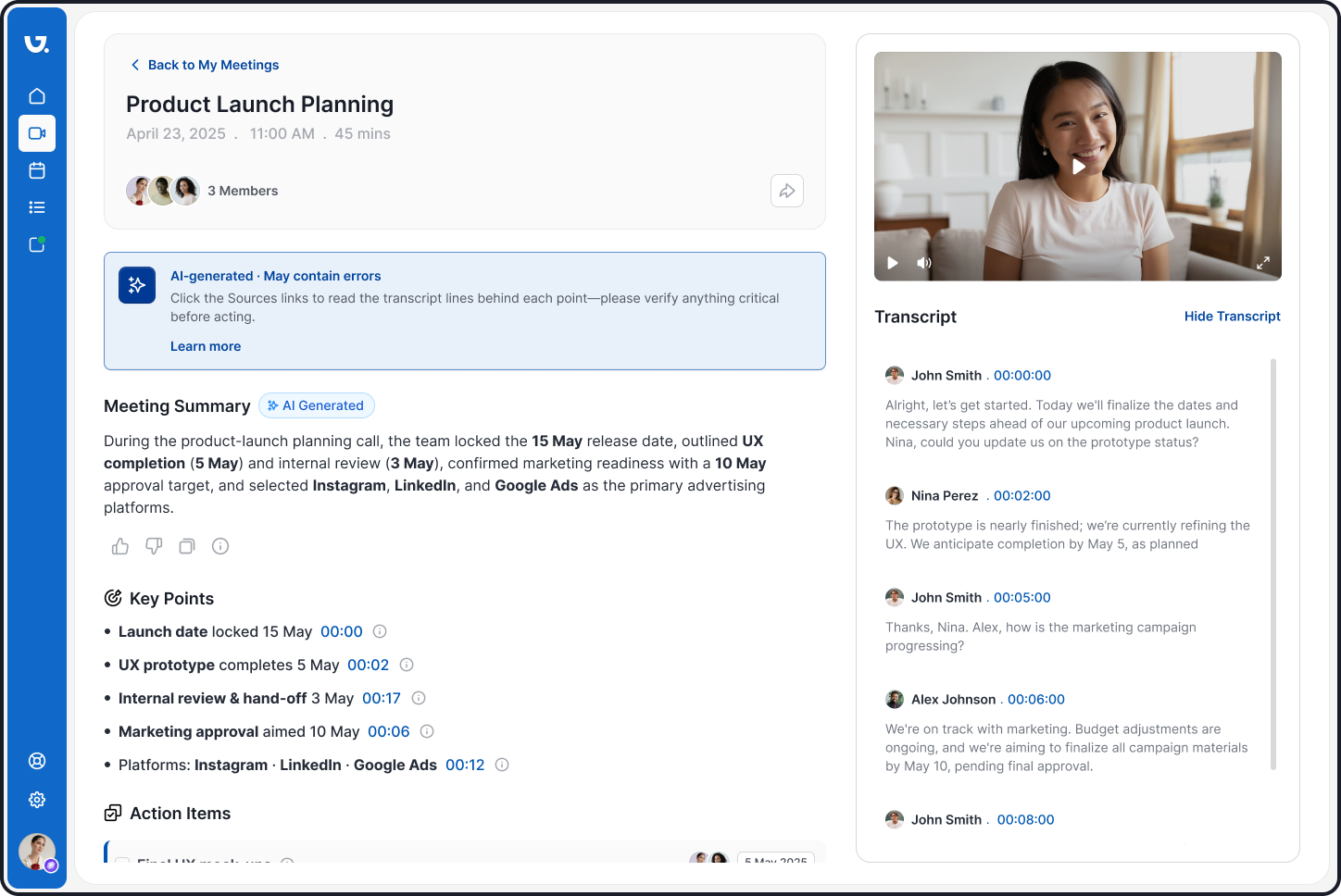

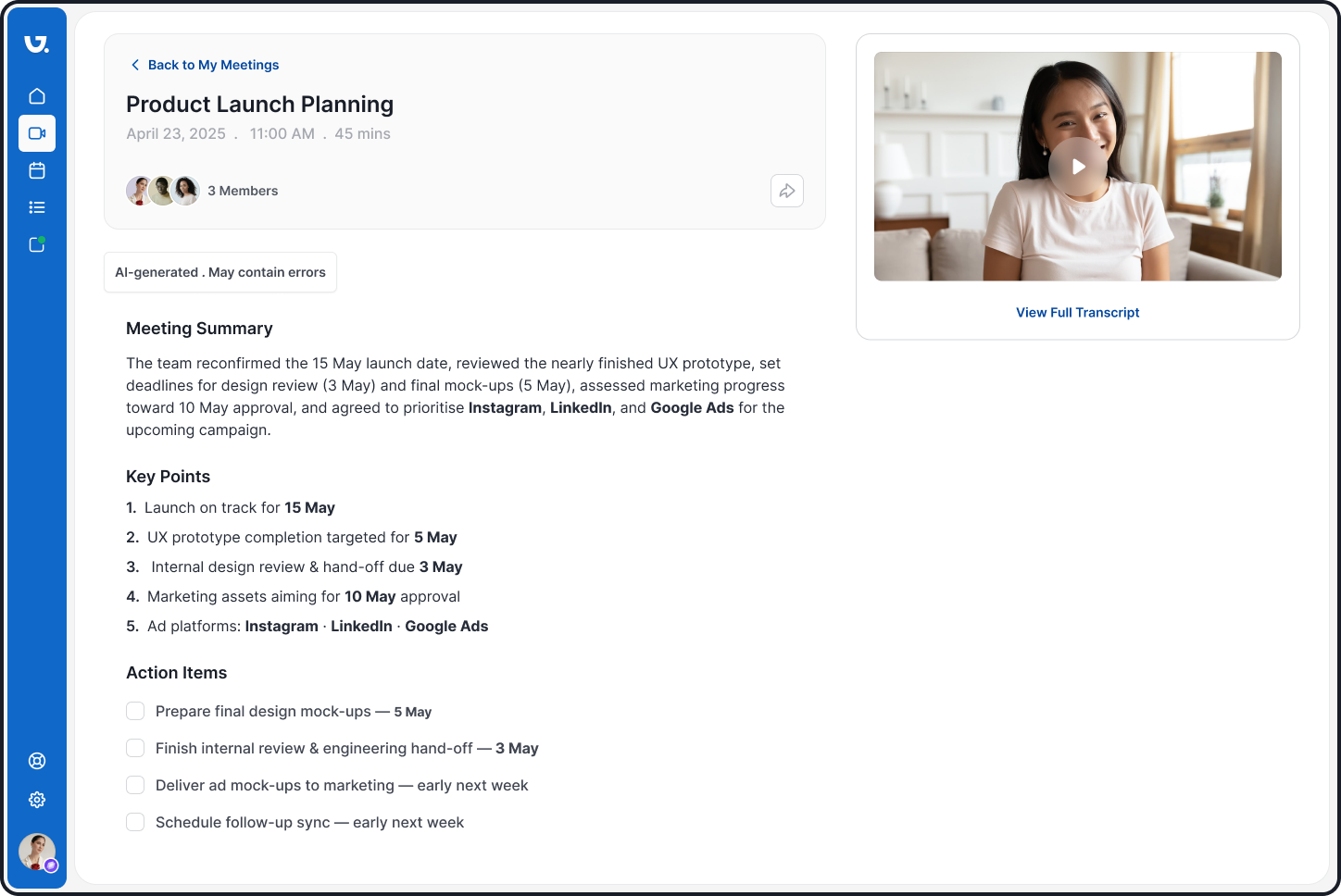

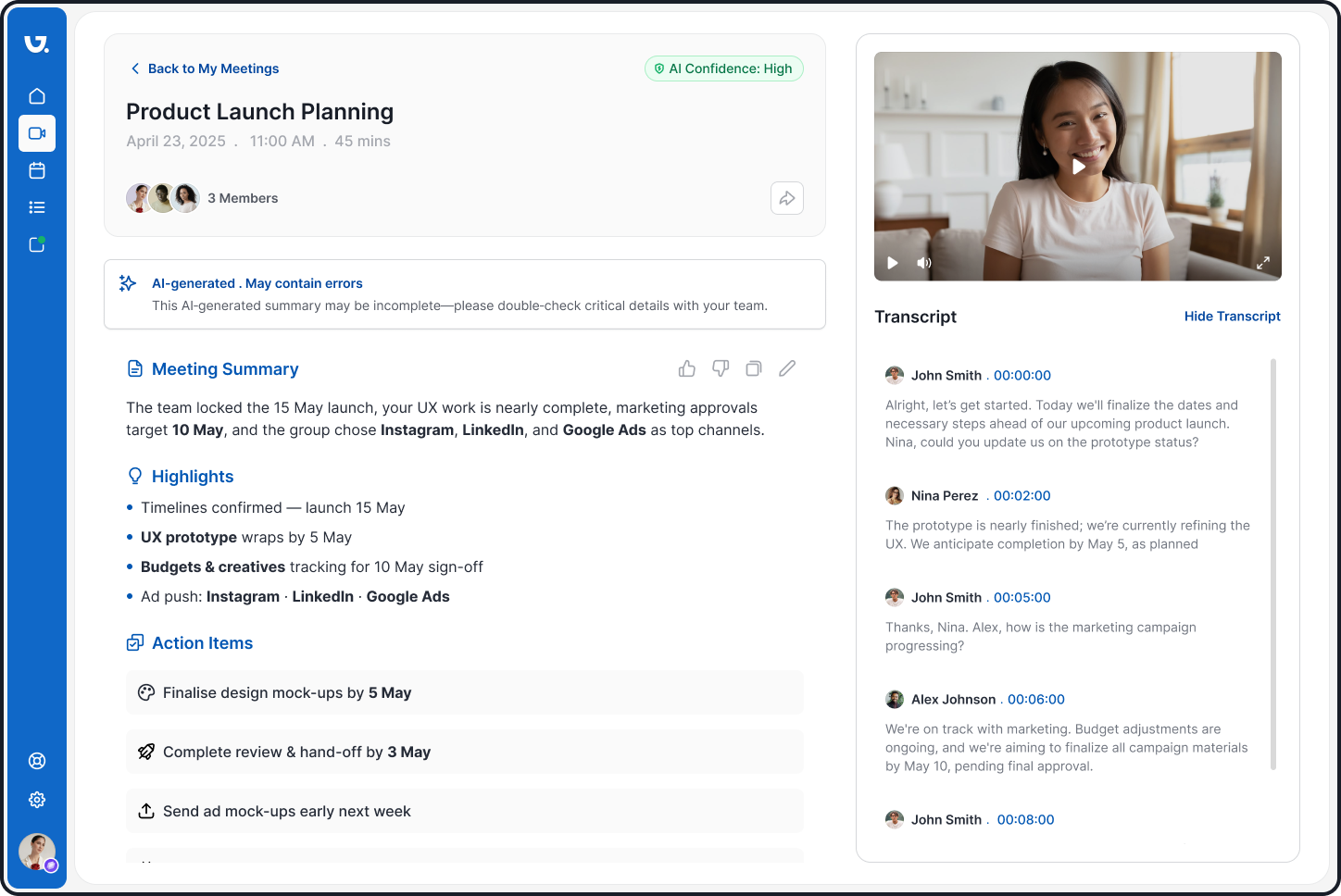

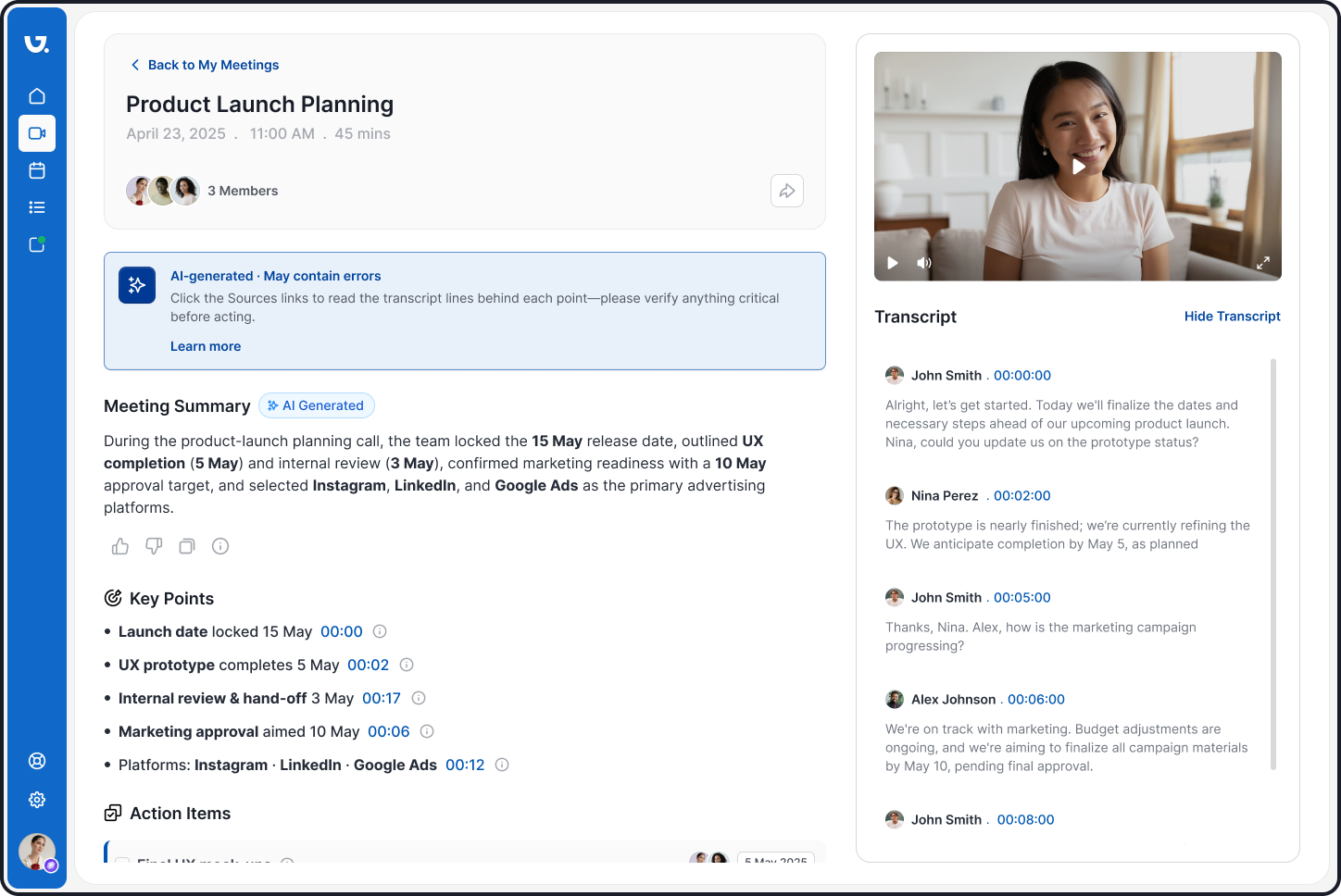

I designed three high-fidelity Figma prototypes, each representing a different semiotic strategy.

Prototype A - Minamilst & Neutral

- Clean and simple.

- Lacked trust cues, leading to skepticism.

Prototype B – Metaphoric & Engaging

- Used humanized tone and metaphorical icons.

- Improved comprehension, but users still wanted transparency.

Prototype C – Transparent & Informative

- Combined visual and linguistic cues.

- Included timestamps, confidence indicators, and source links.

- Scored highest on trust and usability.

Usability Testing

Participants

15 users tested all three prototypes.

Methods

Task completion, observation, and post-test interviews.

Results

- 35% higher trust scores for Prototype C than for Prototype A.

- Faster task completion when verifying summaries.

- Users expressed higher willingness to adopt the tool long-term.

Trust, Semiotics, and the Design of an AI Meeting Tool

An AI-powered meeting assistant designed to bridge academic research and practical design, Glyptik transforms complex interactions into seamless, trustworthy meeting experiences.

Project Overview

I set out to design an AI meeting summarization interface that users could trust and use with confidence.

By blending human-computer interaction heuristics with cognitive semiotics, I developed and tested a design framework that improves trust, transparency, and usability in AI-generated summaries.

Impact at a glance

- Built a tested, scalable design framework for AI-generated summaries.

- Improved user trust and usability scores in testing.

- Produced actionable guidelines adaptable to any AI productivity tool.

Built a tested, scalable design framework for AI-generated summaries.

The Challenge

AI meeting summaries are fast but often distrusted. Through early research, I identified three major pain points:

Users doubt accuracy when AI outputs lack explanation.

Ambiguous UI elements lead to misinterpretation.

Lack of feedback causes users to abandon the feature.

Goal

Design an interface that communicates clearly, instills trust, and keeps users engaged without slowing them down.

Research Process

I followed a Double Diamond process infused with academic rigor.

Discover

Reviewed academic literature on semiotics, trust in AI, and usability heuristics.

• Conducted competitor analysis of AI meeting tools (e.g., Zoom, Otter, Microsoft Teams).

• Interviewed participants to uncover trust barriers in AI tools.

Define

Reviewed academic literature on semiotics, trust in AI, and usability heuristics.

• Conducted competitor analysis of AI meeting tools (e.g., Zoom, Otter, Microsoft Teams).

• Interviewed participants to uncover trust barriers in AI tools.

Design Exploration

I designed three high-fidelity Figma prototypes, each representing a different semiotic strategy.

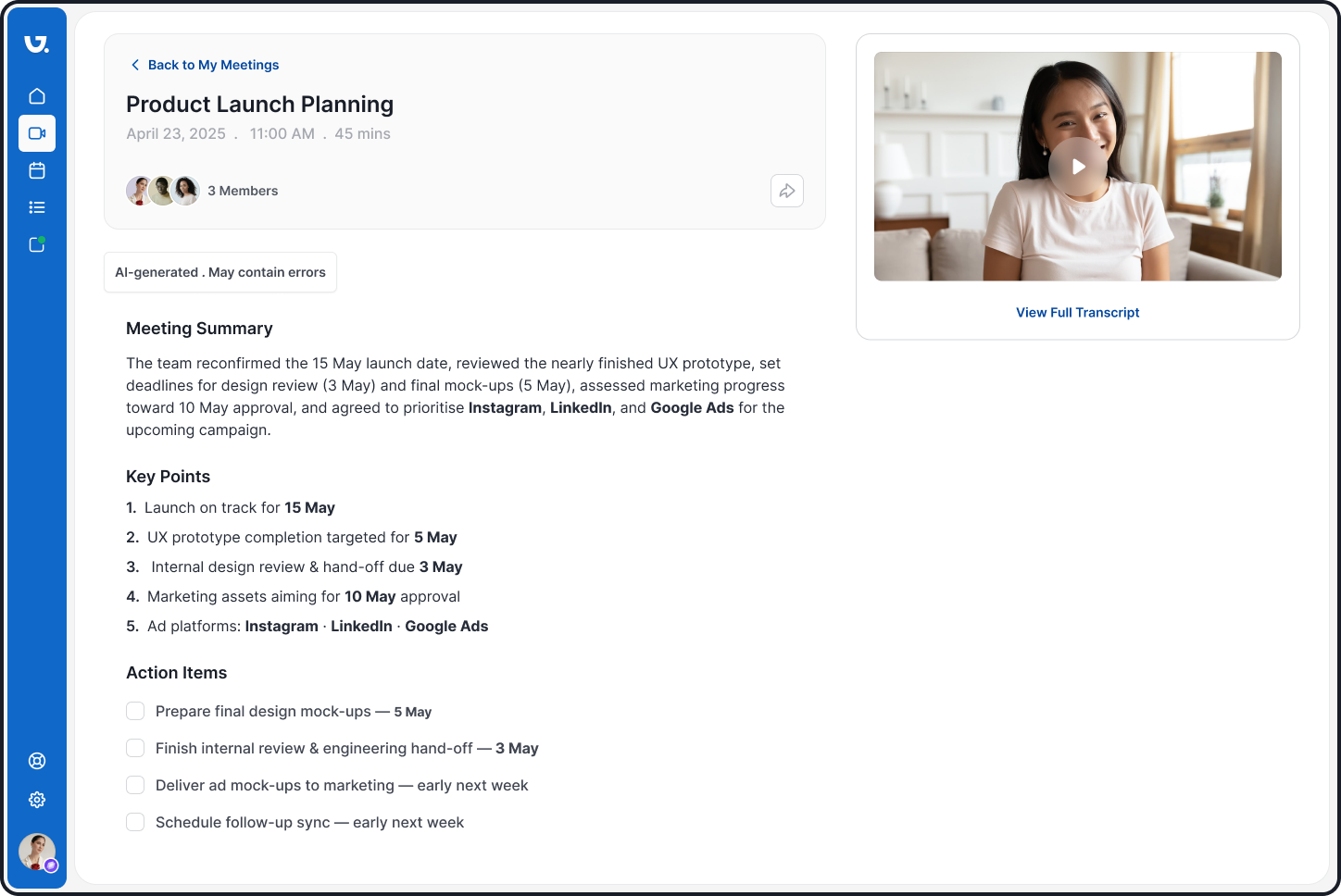

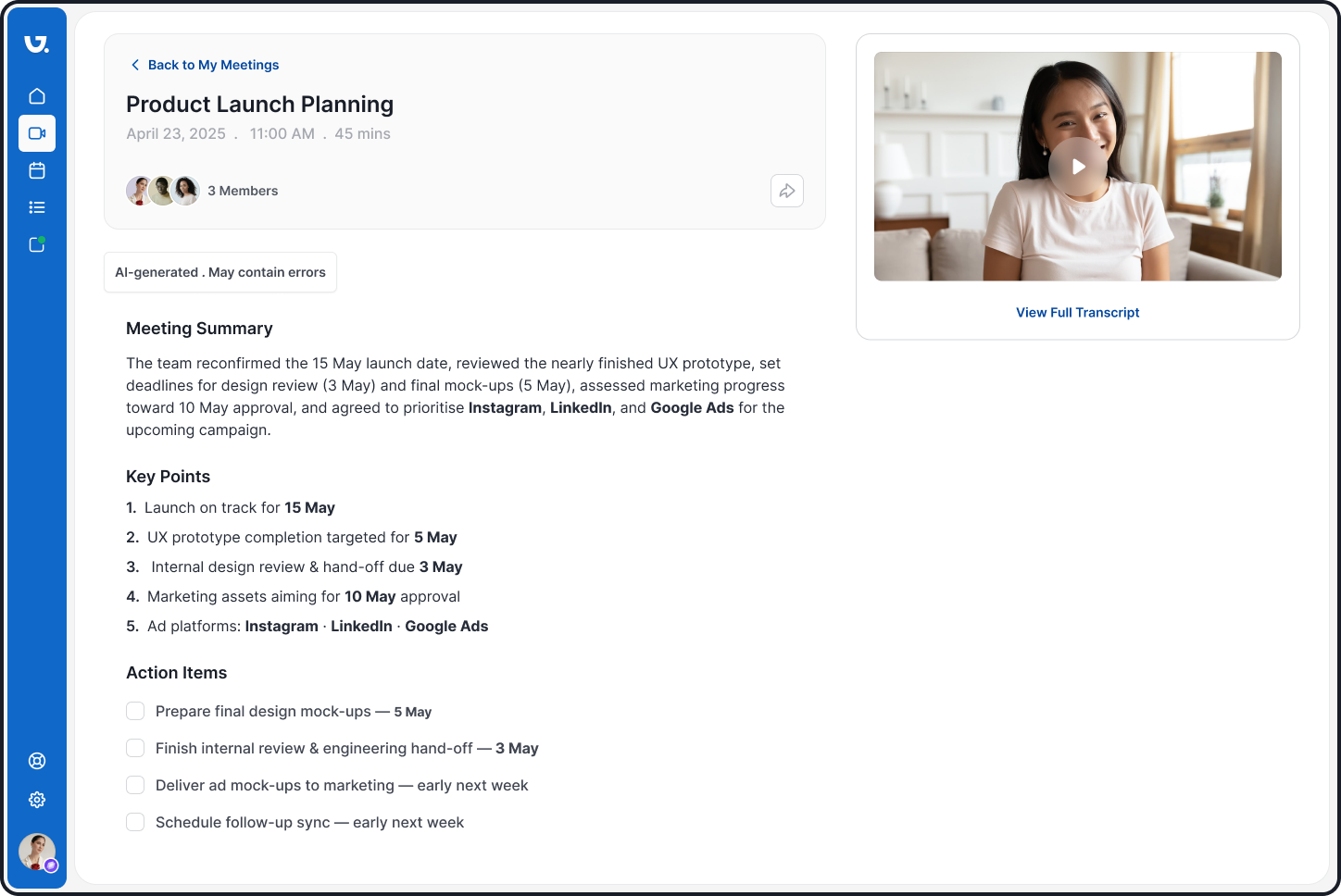

Prototype A - Minamilst & Neutral

- Clean and simple.

- Lacked trust cues, leading to skepticism.

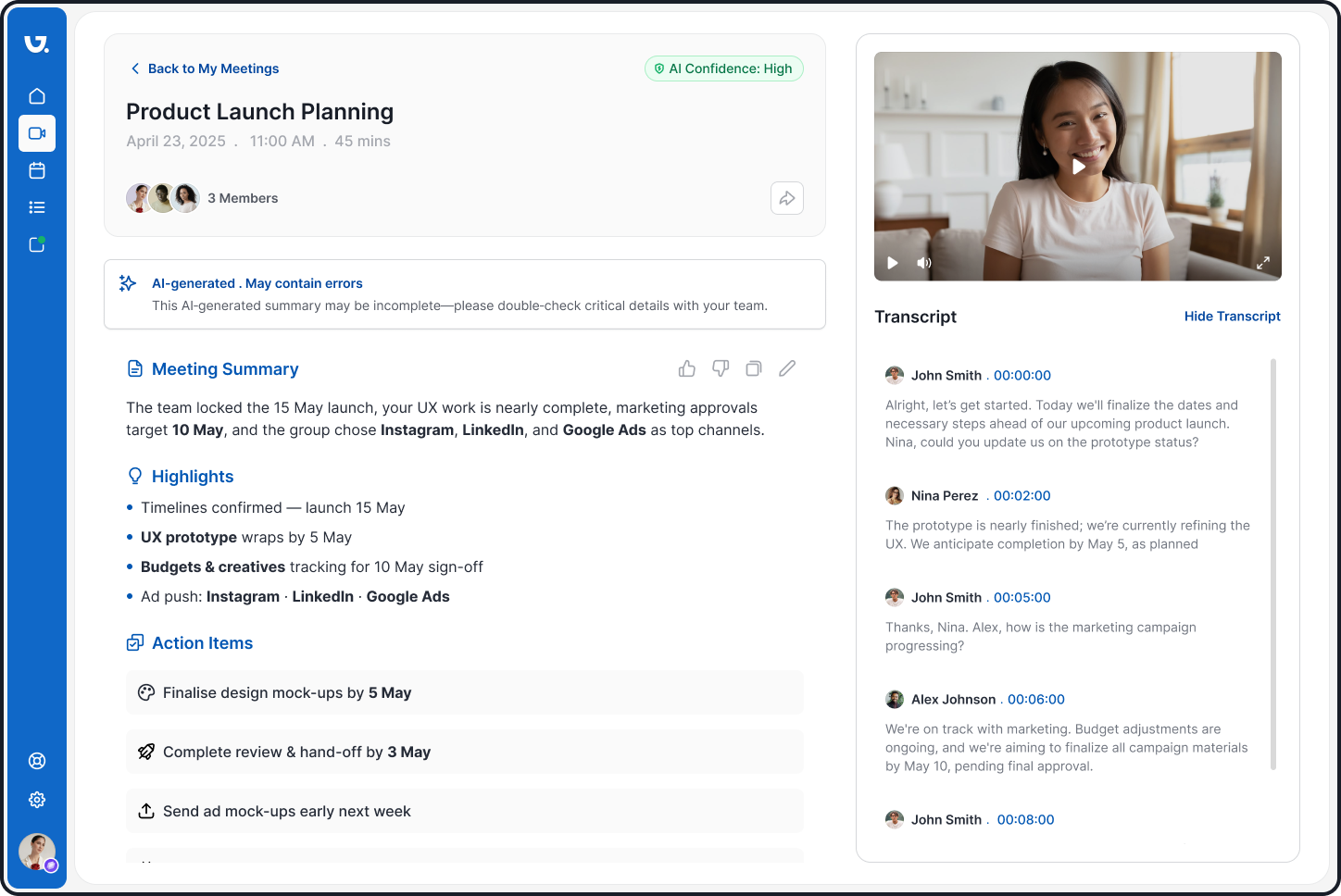

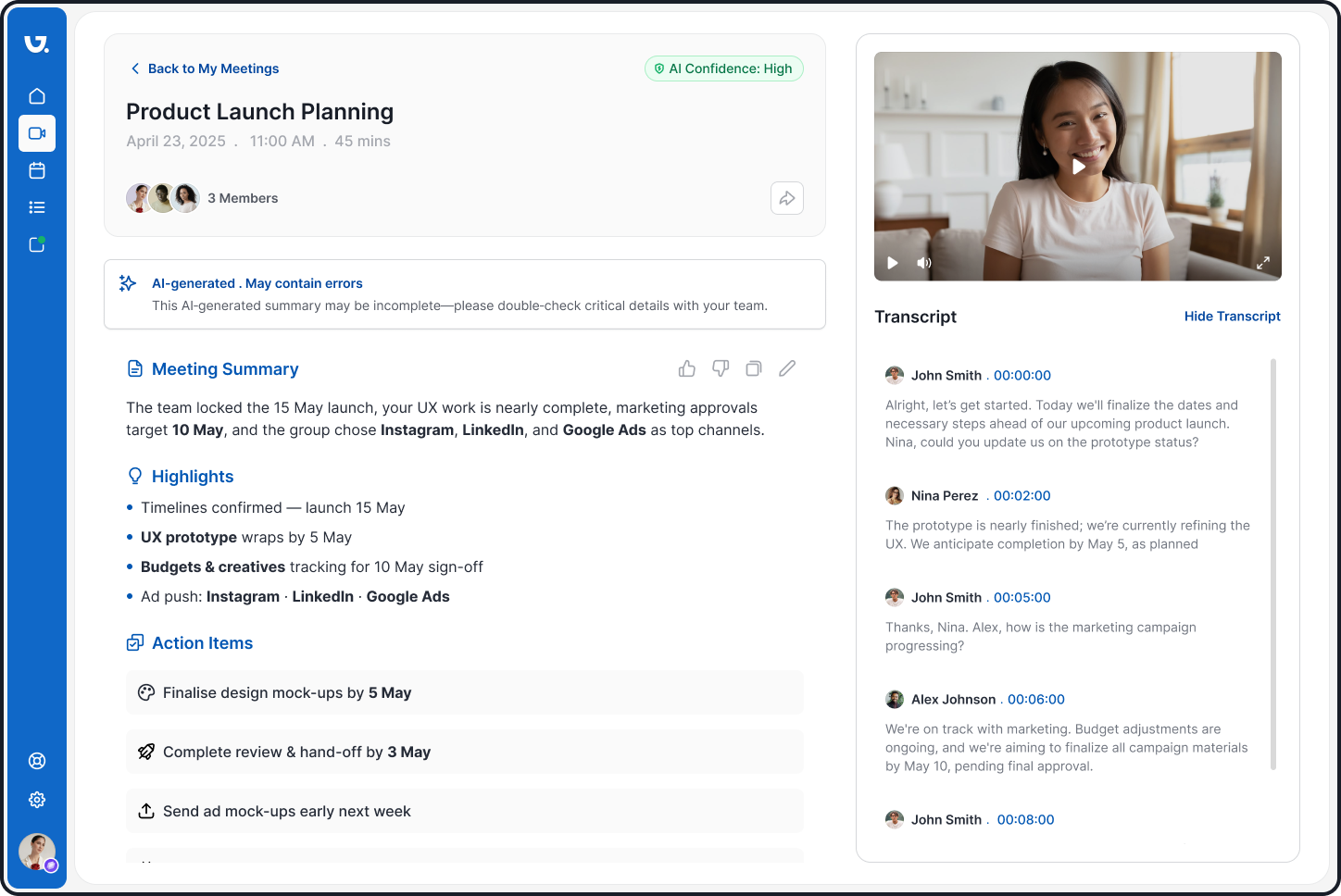

Prototype B – Metaphoric & Engaging

- Used humanized tone and metaphorical icons.

- Improved comprehension, but users still wanted transparency.

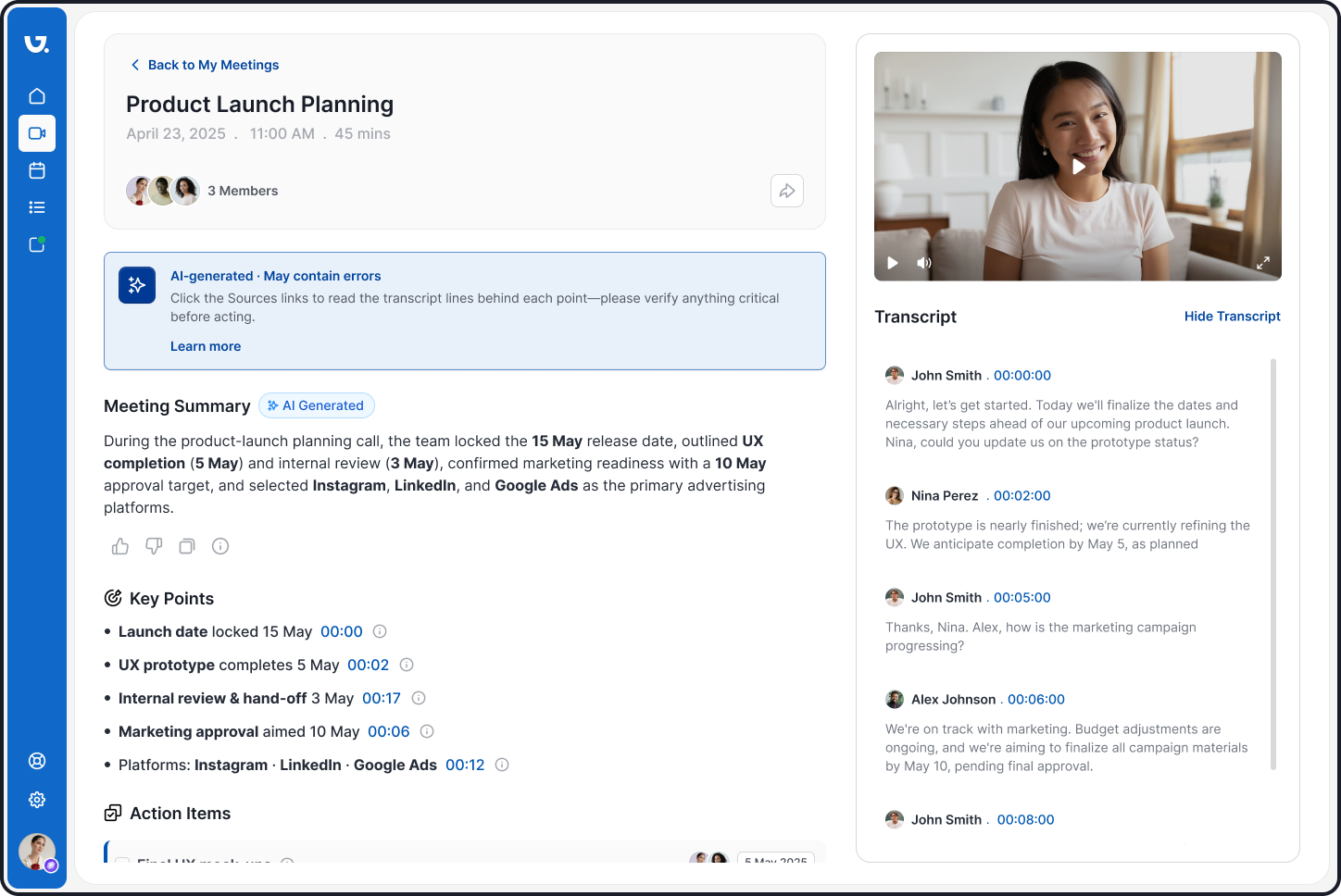

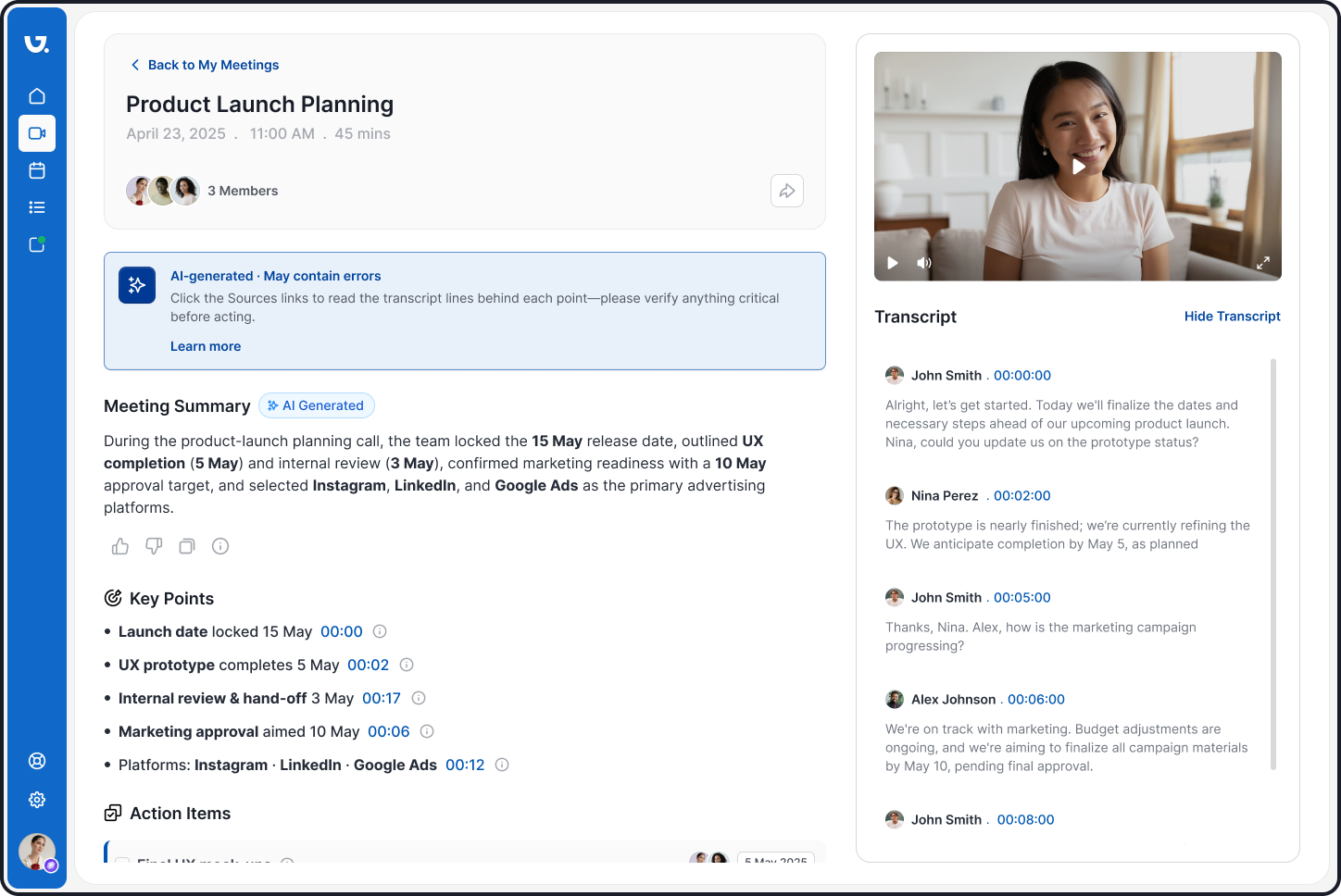

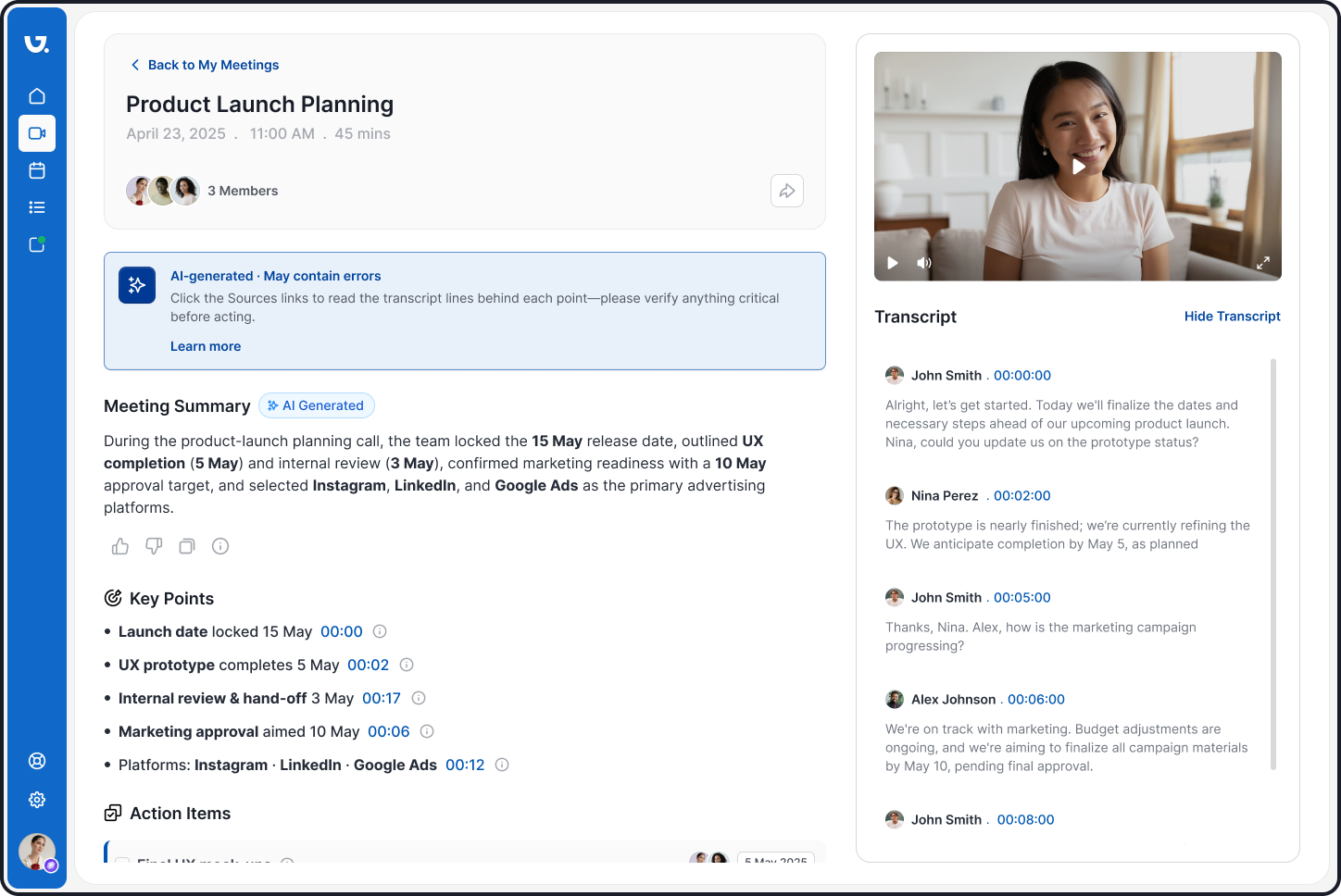

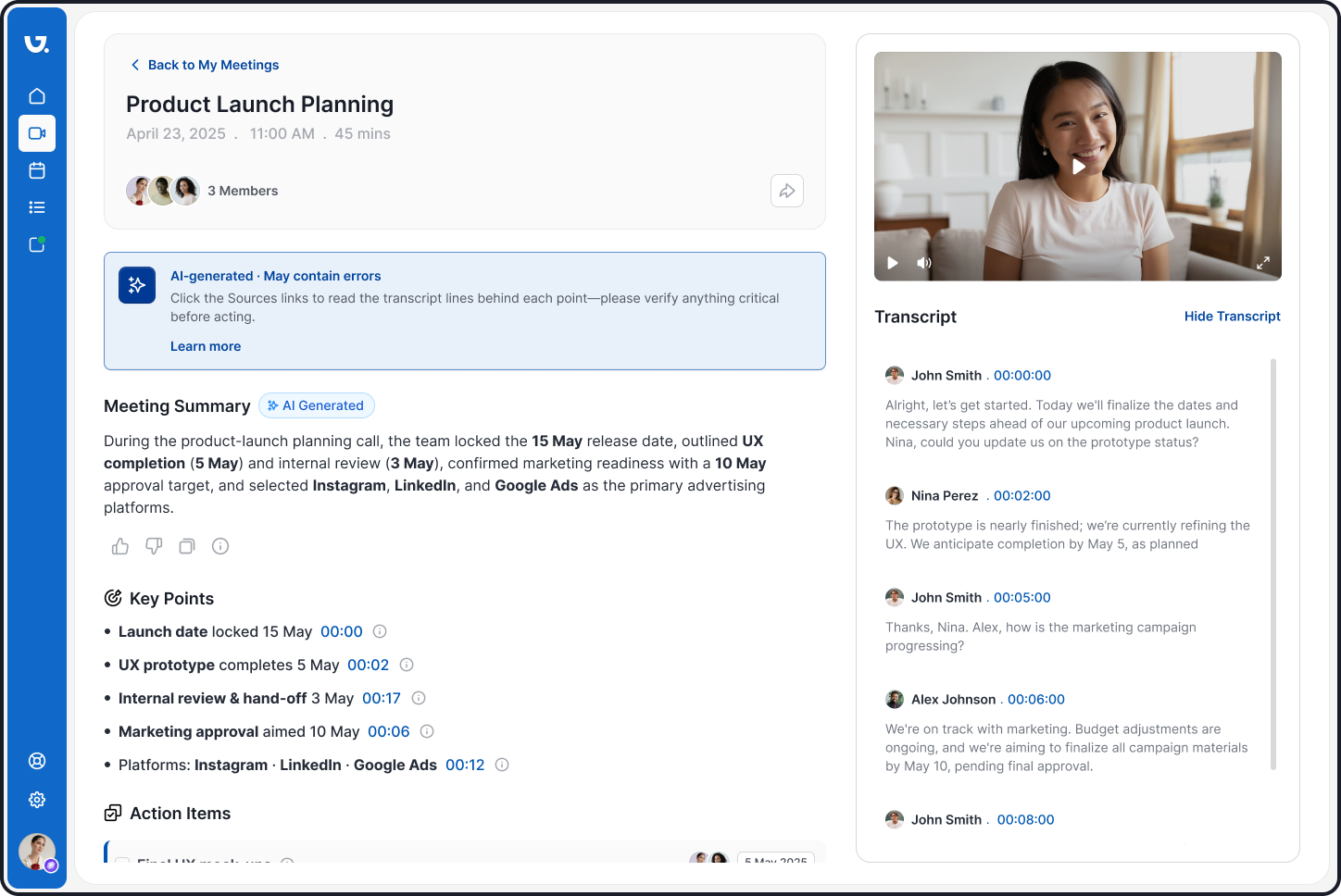

Prototype C – Transparent & Informative

- Combined visual and linguistic cues.

- Included timestamps, confidence indicators, and source links.

- Scored highest on trust and usability.

Usability Testing

Participants

15 users tested all three prototypes.

Methods

Task completion, observation, post-test interviews.

Results

- 35% increase in trust scores for Prototype C vs A.

- • Faster task completion when verifying summaries.

- • Users expressed higher willingness to adopt the tool long-term.

Trust, Semiotics, and the Design of an AI Meeting Tool

An AI-powered meeting assistant designed to bridge academic research and practical design, Glyptik transforms complex interactions into seamless, trustworthy meeting experiences.

Project Overview

I set out to design an AI meeting summarization interface that users could trust and use with confidence.

By blending human-computer interaction heuristics with cognitive semiotics, I developed and tested a design framework that improves trust, transparency, and usability in AI-generated summaries.

Impact at a glance

- Built a tested, scalable design framework for AI-generated summaries.

- Improved user trust and usability scores in testing.

- Produced actionable guidelines adaptable to any AI productivity tool.

The Challenge

AI meeting summaries are fast but often distrusted. Through early research, I identified three major pain points:

Users doubt accuracy when AI outputs lack explanation.

Ambiguous UI elements lead to misinterpretation.

Lack of feedback causes users to abandon the feature.

Goal

Design an interface that communicates clearly, instills trust, and keeps users engaged without slowing them down.

Research Process

I followed a Double Diamond process infused with academic rigor.

Discover

Reviewed academic literature on semiotics, trust in AI, and usability heuristics.

• Conducted competitor analysis of AI meeting tools (e.g., Zoom, Otter, Microsoft Teams).

• Interviewed participants to uncover trust barriers in AI tools.

Define

- Consolidated findings into key blockers: lack of transparency, cold/impersonal language, and unclear validation options.

- Mapped hypotheses:

- Visual cues improve trust.

- Linguistic cues improve usability.

- Combining both yields the highest results.

Design Exploration

I designed three high-fidelity Figma prototypes, each representing a different semiotic strategy.

Prototype A - Minamilst & Neutral

- Clean and simple.

- Lacked trust cues, leading to skepticism.

Prototype B – Metaphoric & Engaging

- Used humanized tone and metaphorical icons.

- Improved comprehension, but users still wanted transparency.

Prototype C – Transparent & Informative

- Combined visual and linguistic cues.

- Included timestamps, confidence indicators, and source links.

- Scored highest on trust and usability.

Usability Testing

Participants

15 users tested all three prototypes.

Methods

Task completion, observation, post-test interviews.

Results

- 35% increase in trust scores for Prototype C vs A.

- • Faster task completion when verifying summaries.

- • Users expressed higher willingness to adopt the tool long-term.